The first step of any machine vision system is image acquisition: retrieving an image from a source, usually hardware such as a camera or sensor. It is also the most important step in the workflow, because without an image no processing is possible. In a machine vision system, the camera takes the light from a scene and converts it into digital information (pixels) using a CMOS or CCD sensor. The sensor is therefore the foundation of any machine vision system, and many of the system's key specifications follow from the camera's image sensor. One of these is resolution, the total number of rows and columns of pixels the sensor accommodates.

The following are some fundamental features in a machine vision camera that should be considered:

CAMERA TYPE

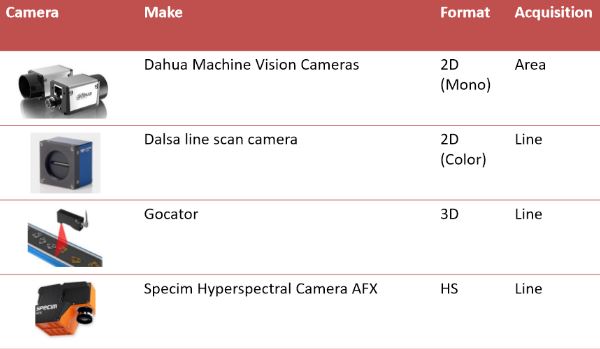

Based on the image format, cameras fall into three major types:

- 2D cameras

- 3D cameras

- Hyperspectral cameras

Based on the acquisition type, cameras fall into two major categories:

- Line Scan cameras

- Area scan cameras

INTERFACE

Since the output signal of cameras used in machine vision is digital, little information is lost in transmission. As machine vision has matured, imaging technology has advanced to include a multitude of digital interfaces.

However, as with camera choices, there is no single best interface; one must select the most appropriate for the machine vision application. One distinction is how the interface transfers data. Asynchronous, or deterministic, transmission uses two-way communication so that each transfer is confirmed by a receipt. This guarantees signal integrity, placing delivery over timing. Isochronous transmission uses scheduled packet transfers. This guarantees timing but allows packets to be dropped at high transfer rates.


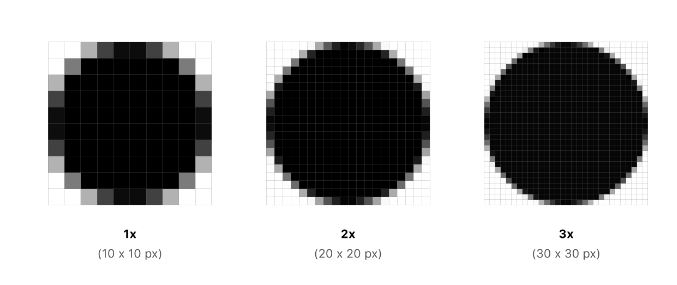

RESOLUTION

The number of pixels in a given CCD or CMOS sensor array defines the image resolution of a camera. This information can be found on the camera datasheet, usually given as the number of pixels along the X-axis and Y-axis.

The application determines how many pixels are required to identify the desired feature accurately. This assumes a perfect lens, that is, one that does not limit the resolution the pixels can deliver. In general, more pixels provide better accuracy and repeatability. However, more data demands more processing, which can significantly limit the performance of a machine vision system.
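As a rough illustration of how the application drives the pixel count, the sketch below estimates the minimum number of pixels along one axis from the field of view and the smallest feature to detect. The function name, the default of 3 pixels per feature, and the example dimensions are assumptions chosen for illustration, not values from a specific standard or datasheet.

```python
import math


def required_pixels(field_of_view_mm, smallest_feature_mm, pixels_per_feature=3):
    """Estimate the minimum pixel count along one axis.

    pixels_per_feature is an assumed rule-of-thumb margin; the right
    value depends on the application and the inspection algorithm.
    """
    if field_of_view_mm <= 0 or smallest_feature_mm <= 0:
        raise ValueError("dimensions must be positive")
    return math.ceil(field_of_view_mm / smallest_feature_mm * pixels_per_feature)


# Hypothetical task: 200 mm x 150 mm field of view, 0.5 mm smallest defect.
columns = required_pixels(200, 0.5)
rows = required_pixels(150, 0.5)
print(f"Minimum sensor resolution: {columns} x {rows} pixels")
```

Like the text above, this estimate assumes the lens is not the limiting factor; a real lens may require additional margin.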

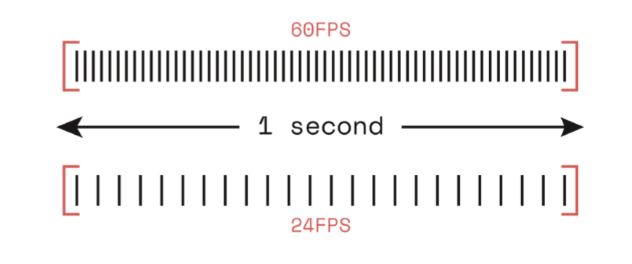

FRAME RATE

Like video cameras, industrial cameras output image sequences at a fixed rate. The camera’s speed is expressed in “images per second” or “frames per second” (FPS); in the context of video and machine vision, the terms frame and image are used interchangeably. Higher frame rate cameras are usually more expensive.
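Frame rate and resolution together determine how much data the camera produces, which affects both the choice of interface and the processing load. The following is a minimal sketch, assuming uncompressed images and ignoring any protocol overhead; the function name and the example camera parameters are hypothetical.

```python
def data_rate_mb_per_s(width_px, height_px, bits_per_pixel, fps):
    """Approximate raw image data rate in megabytes per second.

    Assumes uncompressed frames and no transport overhead.
    """
    bytes_per_frame = width_px * height_px * bits_per_pixel / 8
    return bytes_per_frame * fps / 1e6


# Hypothetical camera: 1920 x 1200 pixels, 8 bits per pixel, 60 FPS.
rate = data_rate_mb_per_s(1920, 1200, 8, 60)
print(f"Raw data rate: {rate:.2f} MB/s")
```

Comparing this figure with the usable bandwidth of a candidate interface gives a quick check of whether the desired frame rate is achievable at full resolution.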

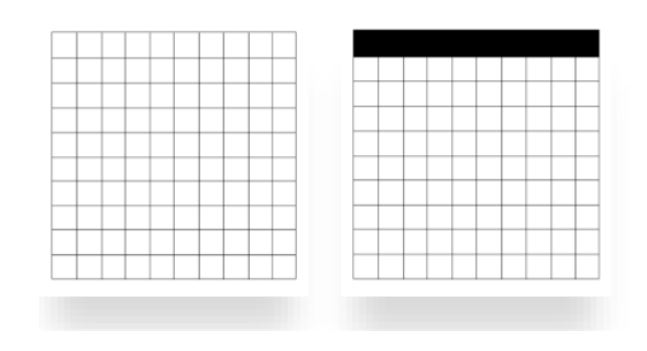

SHUTTER TYPE

A shutter, in imaging and photography, is the device that opens the lens aperture of a camera to admit light and thus expose the sensor. Cameras that use a global shutter (left) capture the entire sensor's data all at once. Cameras with a rolling shutter (right) scan across the sensor line by line.
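Because a rolling shutter reads the sensor line by line, a moving object shifts between the first and the last line, which appears as skew in the image. The sketch below estimates that skew under a simplified model: a constant time per line and an object moving horizontally at constant speed. The function name and all numbers are illustrative assumptions.

```python
def rolling_shutter_skew_px(rows, line_time_us, object_speed_px_per_s):
    """Estimate horizontal skew (in pixels) between the first and last row.

    Simplified model: each row starts line_time_us after the previous one,
    and the object moves horizontally at a constant speed.
    """
    # Delay between the start of the first row and the start of the last row.
    delay_s = (rows - 1) * line_time_us * 1e-6
    return object_speed_px_per_s * delay_s


# Hypothetical sensor: 1001 rows, 10 microseconds per line,
# object moving at 2000 pixels per second across the image.
skew = rolling_shutter_skew_px(1001, 10, 2000)
print(f"Estimated skew: {skew:.1f} px")
```

In this model a global shutter corresponds to a line time of zero, so the estimated skew is zero regardless of object speed.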

SENSOR SIZE

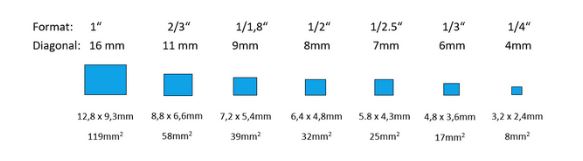

Cameras used in machine vision have sensors of varying size, depending on the camera and its resolution. Most cameras equipped with smaller sensors are used with C-mount or possibly CS-mount optics. The C-mount thread has a diameter of about 1 inch (25.4 mm) and a thread pitch of 1/32 inch.

The sensors used in standard cameras are clearly smaller than the mount thread, with image diagonals ranging from 4 to 16 mm. These sensor sizes, too, are given in inches, but the inch value is nominal: a 1-inch sensor has a diagonal of only 16 mm. This nomenclature dates back to the time of television broadcast imagers.
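Since the inch designation does not give the physical size directly, the image diagonal can instead be estimated from the resolution and the pixel pitch listed on a datasheet. This is a minimal sketch assuming square pixels; the function name and the example sensor figures are hypothetical.

```python
import math


def sensor_diagonal_mm(width_px, height_px, pixel_pitch_um):
    """Estimate the sensor's image diagonal in millimetres.

    Assumes square pixels with the same pitch in both directions.
    """
    width_mm = width_px * pixel_pitch_um / 1000
    height_mm = height_px * pixel_pitch_um / 1000
    return math.hypot(width_mm, height_mm)


# Hypothetical sensor: 2448 x 2048 pixels with a 3.45 micrometre pixel pitch.
diagonal = sensor_diagonal_mm(2448, 2048, 3.45)
print(f"Image diagonal: {diagonal:.2f} mm")
```

The result can be compared with the image circle of a candidate lens to check that the lens covers the whole sensor.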

QUANTUM EFFICIENCY

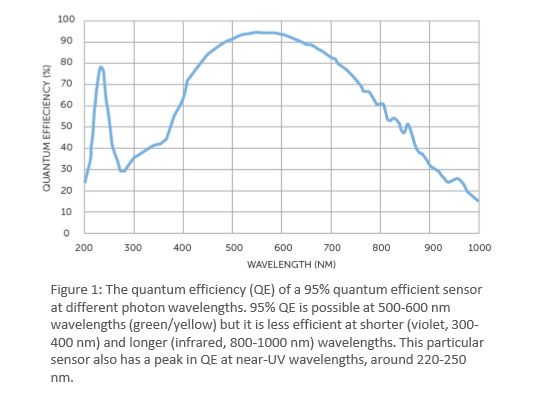

Quantum efficiency (QE) of both CCD and CMOS sensors is the ratio of the charge created by the device to the number of incoming photons. For example, if a sensor had a QE of 80% and was exposed to 100 photons, it would produce 80 electrons of signal for the exposure. QE is usually plotted as a function of wavelength because it varies across the spectrum, and it usually reaches a maximum for green light at about 550 nm. QE is thus a measure of the sensitivity of the particular device.
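The 80% example above can be written out as a short calculation. The sketch below also looks up the peak of a QE curve; the curve values are made up for illustration and do not describe any real sensor, and the function name is hypothetical.

```python
def signal_electrons(photons, qe):
    """Expected signal in electrons for a given photon count and QE (0 to 1)."""
    if not 0 <= qe <= 1:
        raise ValueError("qe must be between 0 and 1")
    return photons * qe


# The example from the text: QE of 80%, 100 incoming photons.
print(signal_electrons(100, 0.80))

# Illustrative (made-up) QE curve: wavelength in nm -> QE.
qe_curve = {450: 0.55, 550: 0.80, 650: 0.65, 850: 0.25}
peak_wavelength = max(qe_curve, key=qe_curve.get)
print(f"Peak QE of {qe_curve[peak_wavelength]:.0%} at {peak_wavelength} nm")
```

This gives the mean expected signal only; it does not model photon shot noise or other noise sources.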

The maximum QE of the camera is usually less than that of the sensor. This is primarily due to external optical and electronic effects.

CONCLUSION

As with resolution and the other features discussed above, cost will dictate what can be accomplished with a vision system. One way to approach the camera selection process is to work backward, with cost as the first constraint.
